Jim Audiomisc

pfm Member

If you go back to some of the older MQA papers, it appears that the first method is, in principle, allowed/supported. However, even these papers hint that, when it is used, only the lowest frequencies of the 48-96kHz band actually get folded and encoded.

The second method, the one you have locked onto, does indeed apply lazy downsampling to a 192k or 384k original to obtain a 96k copy, which is then folded into 48k.
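To illustrate what "lazy" downsampling does to content above the new Nyquist frequency, here is a minimal sketch. It assumes "lazy" means decimating with a very short filter; the two-tap pair average and the helper names (`tone`, `lazy_downsample_by_2`, `amplitude_at`) are hypothetical stand-ins, not MQA's actual decimation filter, which the papers don't specify here.

```python
import math


def tone(freq_hz, fs_hz, n):
    """n samples of a unit-amplitude sine at freq_hz, sampled at fs_hz."""
    return [math.sin(2 * math.pi * freq_hz * k / fs_hz) for k in range(n)]


def lazy_downsample_by_2(x):
    """Halve the sample rate by averaging each adjacent pair of samples.

    Hypothetical stand-in for a 'lazy' decimator: the two-tap average is a
    very weak anti-alias filter, so content above the new Nyquist frequency
    is only partly attenuated and folds back below it.
    """
    return [(x[2 * i] + x[2 * i + 1]) / 2 for i in range(len(x) // 2)]


def amplitude_at(x, freq_hz, fs_hz):
    """Amplitude of the component of x at freq_hz (single-bin DFT).

    Exact when x holds a whole number of cycles of every component present.
    """
    n = len(x)
    w = 2 * math.pi * freq_hz / fs_hz
    re = sum(v * math.cos(w * k) for k, v in enumerate(x))
    im = sum(v * math.sin(w * k) for k, v in enumerate(x))
    return 2 * math.hypot(re, im) / n


if __name__ == "__main__":
    # A 60kHz tone in a 192k "original": 1920 samples = 600 whole cycles.
    x = tone(60_000, 192_000, 1920)
    y = lazy_downsample_by_2(x)  # 960 samples at 96k (Nyquist 48kHz)
    # 60kHz is above the new Nyquist, so it reappears at 96 - 60 = 36kHz.
    print(f"36kHz alias amplitude: {amplitude_at(y, 36_000, 96_000):.3f}")
```

With this two-tap filter the 60kHz tone is only knocked down by about 5dB (amplitude of roughly 0.556) before it folds to 36kHz, which is the sense in which such downsampling is "lazy".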

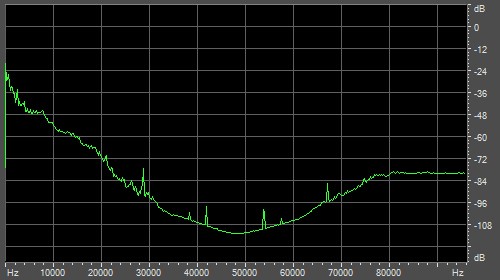

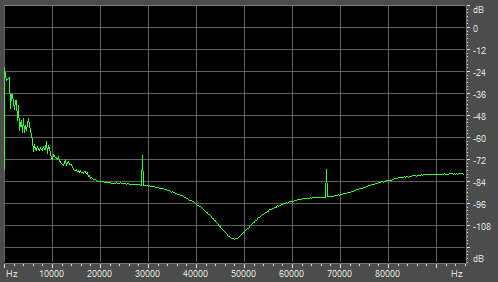

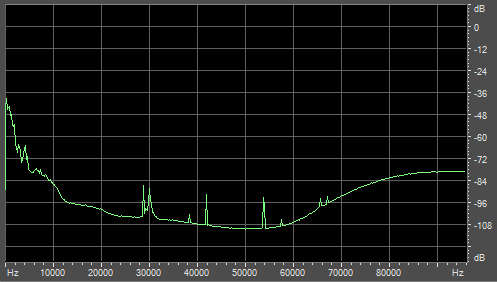

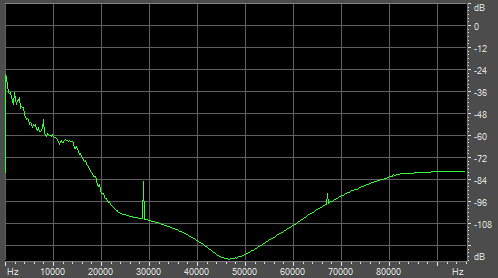

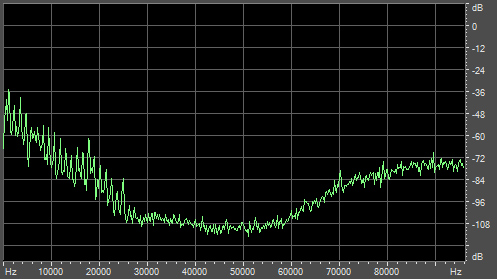

For what it is worth, I have now analysed a fair number of Tidal MQA tracks that light three LEDs on the Explorer2, i.e. tracks that announce themselves as having a sample rate of at least 192kHz.

What I see is that, at the output of the E2, every one of those tracks that contains enough high-frequency content for me to observe what is happening uses lazy upsampling to extend from 96kHz to 192kHz and higher.

This seems to confirm that the aforementioned double-folding is in effect not used, or at least not often enough to show up in my sample tracks, and thus that MQA, in practice, only conveys the equivalent of 96k, not 192k, and certainly not 384k.
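One way to see why lazy upsampling is recognisable at the output is that it leaves mirror images: a component at f in the 96k signal reappears at 96kHz - f in the 192k output. A minimal sketch, assuming "lazy" upsampling is modelled as simple sample repetition (zero-order hold); the E2's actual interpolation filter is not known from this thread, and the helper names are hypothetical.

```python
import math


def tone(freq_hz, fs_hz, n):
    """n samples of a unit-amplitude sine at freq_hz, sampled at fs_hz."""
    return [math.sin(2 * math.pi * freq_hz * k / fs_hz) for k in range(n)]


def lazy_upsample_by_2(x):
    """Double the sample rate by repeating every sample (zero-order hold).

    Hypothetical model of 'lazy' upsampling: with no proper interpolation
    filter, each component at f leaves an image at (new_rate / 2) - f.
    """
    y = []
    for v in x:
        y.extend((v, v))
    return y


def amplitude_at(x, freq_hz, fs_hz):
    """Amplitude of the component of x at freq_hz (single-bin DFT).

    Exact when x holds a whole number of cycles of every component present.
    """
    n = len(x)
    w = 2 * math.pi * freq_hz / fs_hz
    re = sum(v * math.cos(w * k) for k, v in enumerate(x))
    im = sum(v * math.sin(w * k) for k, v in enumerate(x))
    return 2 * math.hypot(re, im) / n


if __name__ == "__main__":
    # A 30kHz tone carried at 96k: 960 samples = 300 whole cycles.
    x = tone(30_000, 96_000, 960)
    y = lazy_upsample_by_2(x)  # 1920 samples at 192k
    print(f"30kHz (original): {amplitude_at(y, 30_000, 192_000):.3f}")
    print(f"66kHz (image):    {amplitude_at(y, 66_000, 192_000):.3f}")
```

In this model the 66kHz image comes out only about 5.4dB below the 30kHz tone, so mirrored content above 48kHz that tracks the 24-48kHz content is a plausible signature of a 96k signal that has merely been stretched to 192k.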

Right, so to make sure I'm following correctly: am I right to assume that your examinations indicate that, when encoding, they are:

First, using the 'lazy' downsampling (which I call 'origami') to go 192k -> 96k.

Then using what I call 'bitstacking' to go 96k -> 48k.

And that, although the 'use bitstacking twice' method is covered by their patents etc., that doesn't look like what they're actually doing?

If so, that makes some sense to me, as I'd expect it to be less damaging than trying to cascade bitstacking.
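For the 96k -> 48k bitstacking step, a minimal sketch of the packing alone, assuming bitstacking means carrying the coded 24-48kHz band as a separate low-resolution stream in the least significant bits of each 24-bit 48k word. The 16/8 bit split and the names `stack`/`unstack` are hypothetical, not MQA's actual layout, and how the side-channel values are derived is not shown.

```python
BASE_BITS = 16   # hypothetical split: bits left for the 0-24kHz baseband
EXTRA_BITS = 8   # hypothetical split: low bits carrying the coded 24-48kHz band
WORD_BITS = BASE_BITS + EXTRA_BITS  # one 24-bit PCM word at 48k


def stack(base, extra):
    """Pack a signed baseband sample and an unsigned side-channel value into one word."""
    if not -(1 << (BASE_BITS - 1)) <= base < (1 << (BASE_BITS - 1)):
        raise ValueError("baseband sample does not fit in BASE_BITS")
    if not 0 <= extra < (1 << EXTRA_BITS):
        raise ValueError("side-channel value does not fit in EXTRA_BITS")
    # Baseband goes in the top bits, side channel in the bottom bits.
    return (base << EXTRA_BITS) | extra


def unstack(word):
    """Split a stacked word back into (baseband sample, side-channel value)."""
    base = word >> EXTRA_BITS               # Python's >> is arithmetic, so the sign survives
    extra = word & ((1 << EXTRA_BITS) - 1)  # bottom EXTRA_BITS bits
    return base, extra


if __name__ == "__main__":
    word = stack(-1234, 200)
    print(word, unstack(word))
```

A player that doesn't decode simply plays the whole word, so the side channel sits in the bottom bits as low-level noise. Under this toy model a second stacking stage would have to take its bits from the same word again, which is one way to read the expectation that cascading bitstacking would be more damaging.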