Joe P

Memory Alpha incarnate | mod; Shatner number = 2

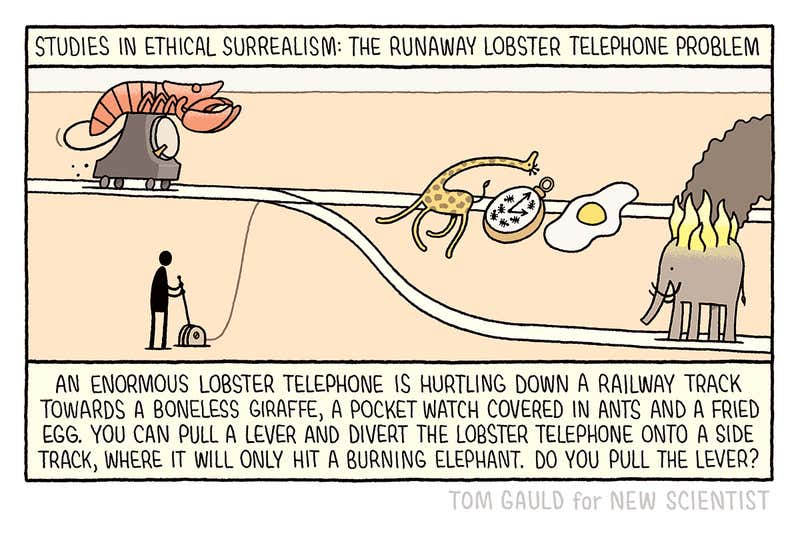

The thing with AI is that, unlike humans, it won’t panic or freeze when confronted with seemingly intractable ethical dilemmas.

However, the many layers of a deep neural network, assuming it has sufficient and appropriate training data, will look at this dilemma and conclude that Tom Gauld is odd but brilliant.

Joe